Introduction

One of the most exciting advancements in AI is Explainable AI (XAI), which aims to make AI models more transparent and understandable. In this post, I’ll explain what XAI is, why it’s important, and how it works in a fun and easy-to-understand way. So, let’s dive in!

What is Explainable AI?

XAI is a branch of AI that focuses on making machine learning models more transparent and interpretable. In other words, it helps us understand how and why AI models make certain decisions. This is important because traditional AI models are often seen as black boxes, making it difficult for humans to understand how they arrive at certain decisions. With XAI, we can gain more insight into the decision-making process of AI models.

Why is Explainable AI important?

There are many reasons why XAI is important. First and foremost, it can help build trust in AI models. If we understand how AI models make decisions, we can have more confidence in their outputs. XAI can also help with debugging and improving models. By understanding how a model makes decisions, we can identify potential biases or errors and make adjustments to improve its performance.

How does Explainable AI work?

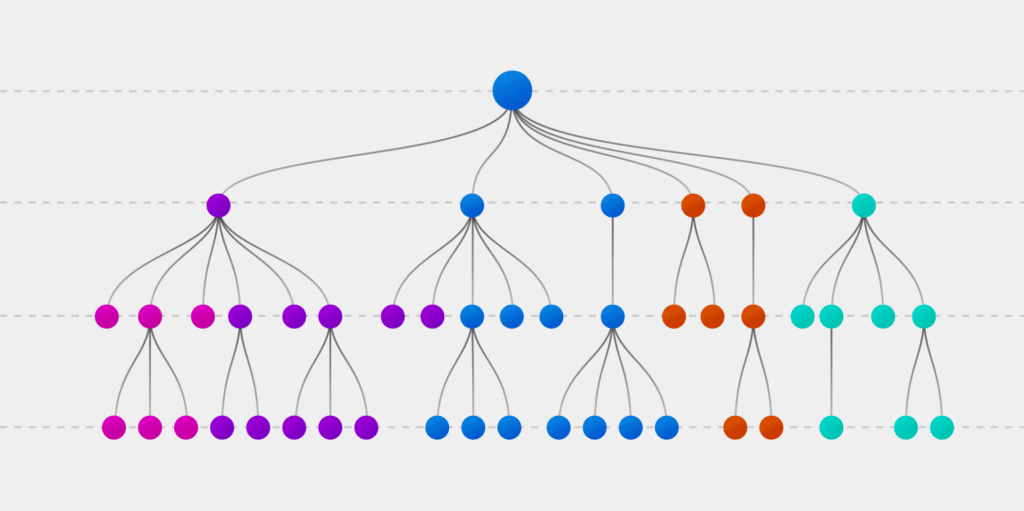

There are many different techniques for building explainable AI models. One common approach is to use decision trees, which break down the decision-making process into a series of smaller, more interpretable steps. Another technique is to use local feature importance, which identifies the features that are most important for a specific decision. Yet another approach is to use model-agnostic methods, which can be applied to any machine learning model regardless of its underlying architecture.
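To make the model-agnostic idea concrete, here is a minimal sketch of permutation importance: shuffle one feature at a time and measure how much the accuracy drops. It only ever calls the model as a function, so it works for any model. The `toy_model` function, the feature names, and the generated data are all assumptions made up for illustration; they are not the Titanic model built later in this post.

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows the model classifies correctly."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(model, rows, labels, n_repeats=20, seed=0):
    """Average accuracy drop when a single feature column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            # Shuffle column j across rows, leaving the other columns intact
            column = [r[j] for r in rows]
            rng.shuffle(column)
            shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical black-box model: uses is_female and age, ignores lucky_number
def toy_model(row):
    is_female, age, lucky_number = row
    return 1 if is_female or age < 10 else 0


data_rng = random.Random(42)
rows = [
    (data_rng.randint(0, 1), data_rng.randint(1, 70), data_rng.randint(0, 9))
    for _ in range(200)
]
# Labels come from the model itself, so the baseline accuracy is exactly 1.0
labels = [toy_model(r) for r in rows]

importances = permutation_importance(toy_model, rows, labels)
for name, score in zip(["is_female", "age", "lucky_number"], importances):
    print(f"{name}: {score:.3f}")
```

Because the toy model never reads `lucky_number`, shuffling that column cannot change any prediction, so its importance comes out as zero. Scikit-learn ships a production version of this idea as `sklearn.inspection.permutation_importance`.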

Example

Let’s use the famous Titanic dataset as an example to illustrate the difference between traditional AI and Explainable AI.

First, let’s load the dataset and build a traditional machine learning model to predict whether a passenger would survive or not based on their age, gender, and other factors:

import pandas as pd

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestClassifier

# Load the Titanic dataset

titanic = pd.read_csv('https://web.stanford.edu/class/archive/cs/cs109/cs109.1166/stuff/titanic.csv')

# Prepare the data

titanic = titanic.drop(columns=['Name', 'Ticket', 'Cabin', 'Embarked'], errors='ignore')  # errors='ignore' skips columns this CSV does not have

titanic = pd.get_dummies(titanic, drop_first=True)

titanic = titanic.dropna()

X = titanic.drop('Survived', axis=1)

y = titanic['Survived']

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Train a random forest classifier

rf = RandomForestClassifier(n_estimators=100, random_state=42)

rf.fit(X_train, y_train)

# Evaluate the model

print("Accuracy:", rf.score(X_test, y_test))

This code builds a random forest classifier using the Titanic dataset, which contains information about the passengers on the Titanic and whether they survived or not. The model achieves an accuracy of around 83%, which is not bad. However, the model is essentially a black box – we don’t know how it’s making its predictions or which features are the most important.
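A random forest does offer one coarse look inside: its impurity-based `feature_importances_` attribute, which ranks features across the whole training set. The sketch below shows this on synthetic stand-in data (the features and the labelling rule are assumptions for illustration, not the Titanic data). Note that these scores are global, so they still say nothing about why one particular passenger received a given prediction.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

rng = np.random.default_rng(0)
n = 500

# Synthetic stand-in features (assumed for illustration only)
is_female = rng.integers(0, 2, size=n)
age = rng.uniform(1, 70, size=n)
noise = rng.normal(size=n)  # carries no information about the label

X = np.column_stack([is_female, age, noise])
# Made-up labelling rule: women and young children survive
y = ((is_female == 1) | (age < 10)).astype(int)

forest = RandomForestClassifier(n_estimators=100, random_state=42).fit(X, y)

# Impurity-based importances: one score per feature, summing to 1
feature_names = ["is_female", "age", "noise"]
for name, importance in zip(feature_names, forest.feature_importances_):
    print(f"{name}: {importance:.3f}")
```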

Now, let’s use Explainable AI to gain more insight into the decision-making process of the model. One way to do this is to use a technique called local feature importance, which identifies the features that are most important for a specific prediction. Here’s the code:

# Install and import the SHAP library

!pip install shap

import shap

# Compute SHAP values for every passenger in the test set

data_for_prediction = X_test

explainer = shap.Explainer(rf, X_test)

shap_values = explainer(data_for_prediction)

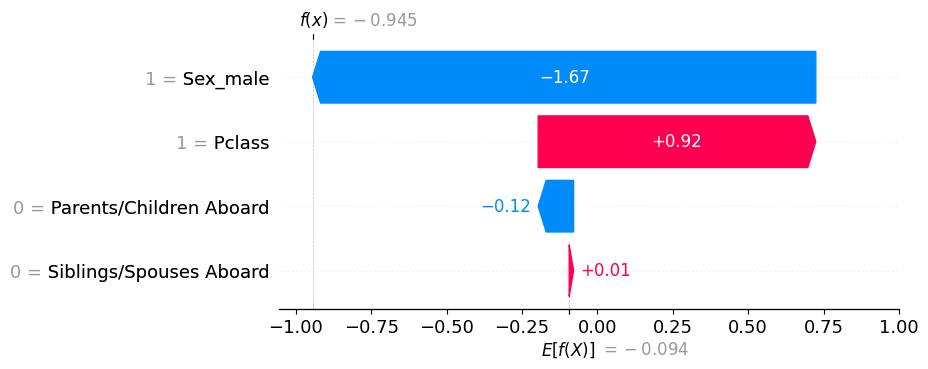

print("Example of a Male Passenger")

shap.plots.waterfall(shap_values[0, :, 1])  # class 1 = survived; a classifier's explanation has one column per class

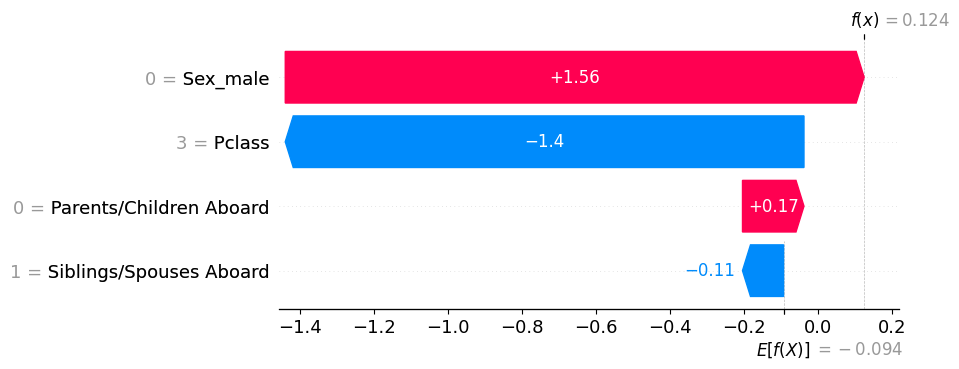

print("Example of a Female Passenger")

shap.plots.waterfall(shap_values[5, :, 1])  # class 1 = survived; a classifier's explanation has one column per class

This code uses the SHAP library to explain two predictions made by the model: one for a male passenger and one for a female passenger. We pick two passengers from the test set (in this case, the ones at positions 0 and 5) and use SHAP to calculate how much each feature contributed to each prediction. Then, we use SHAP’s waterfall plot to visualize the contribution of each feature to the final prediction.

Each plot shows the contribution of each feature to the final prediction for that passenger. We can see, for example, that being female had a positive impact on the prediction while having a high Fare had a negative impact.
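To see what the bars in a waterfall plot represent, it can help to compute Shapley values by hand for a tiny model. The sketch below does this exactly, by averaging each feature's marginal contribution over every coalition of the other features. Everything in it is an assumption for illustration: `toy_score` is a made-up scoring function rather than the random forest above, and a single reference passenger stands in for the background dataset that SHAP averages over. The SHAP library itself uses much faster algorithms than this brute-force loop.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance, background):
    """Exact Shapley values for one instance against one background row.

    A feature that is absent from a coalition takes its background value.
    """
    n = len(instance)

    def value(coalition):
        row = [instance[i] if i in coalition else background[i] for i in range(n)]
        return predict(row)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis


# Hypothetical scoring function with one interaction term
def toy_score(row):
    is_female, pclass, fare = row
    return (
        0.2
        + 0.5 * is_female
        - 0.1 * (pclass - 1)
        + 0.001 * fare
        + 0.1 * is_female * (pclass == 1)
    )


feature_names = ["is_female", "pclass", "fare"]
background = [0, 3, 10.0]  # assumed reference passenger
passenger = [1, 1, 80.0]   # passenger being explained

base_value = toy_score(background)
contributions = shapley_values(toy_score, passenger, background)
prediction = toy_score(passenger)

print(f"base value: {base_value:.3f}")
for name, phi in zip(feature_names, contributions):
    print(f"{name}: {phi:+.3f}")
print(f"base + contributions: {base_value + sum(contributions):.3f}")
print(f"model prediction:     {prediction:.3f}")
```

The last two printed numbers match: the contributions always add up to the gap between the base value and the prediction. That is why a waterfall plot can start at the base value and stack one bar per feature until it reaches the model's output.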

By using Explainable AI techniques like this, we can gain more insight into the decision-making process of machine learning models and make them more transparent and trustworthy.

You can also follow along with the code by cloning my GitHub repo here.

Conclusion

Explainable AI is an exciting area of research that has the potential to make AI models more transparent, interpretable, and trustworthy. By using techniques like decision trees and local feature importance, we can gain more insight into the decision-making process of AI models. As a data scientist, I’m thrilled to be working in a field that constantly pushes the boundaries of what’s possible with AI. So, if you’re interested in learning more about XAI, don’t hesitate to dive in!